Using Neural Style Transfer to ‘improve’ my painting & drawing

- Context: Personal Art Project

- Credits: Hat-tip to Zack Scholl, who explored something similar in 2017 and inspired me as I got started.

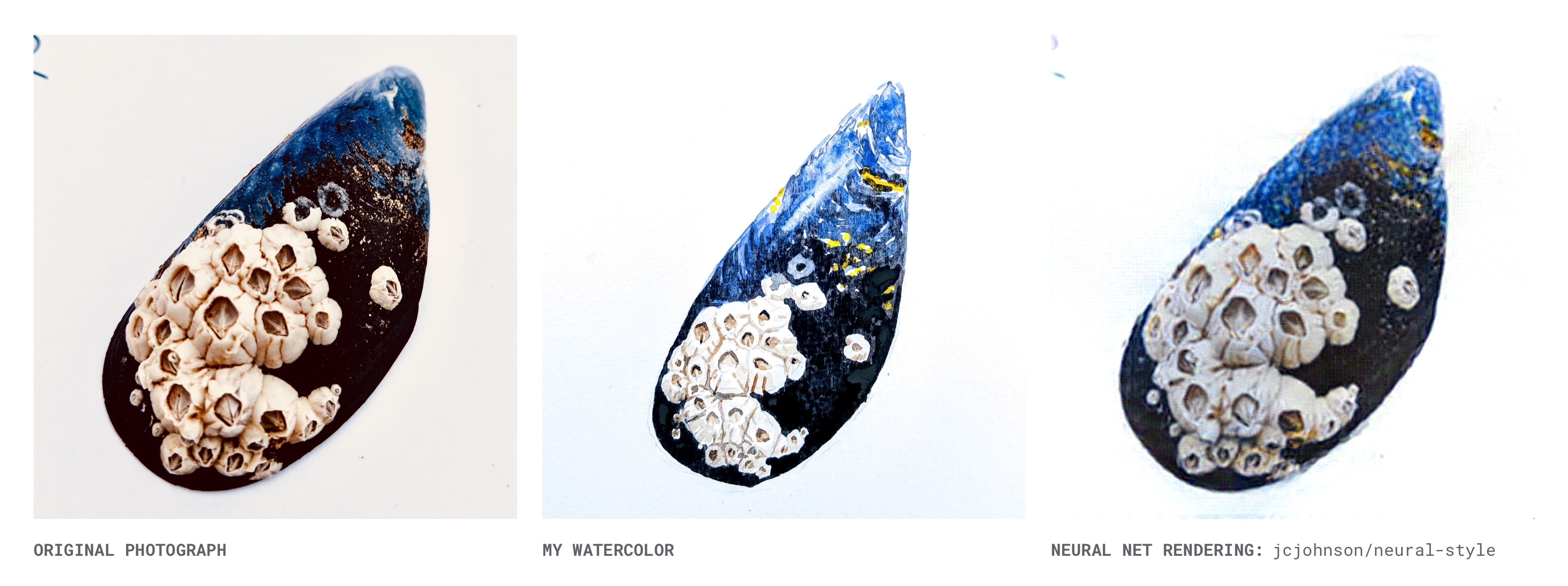

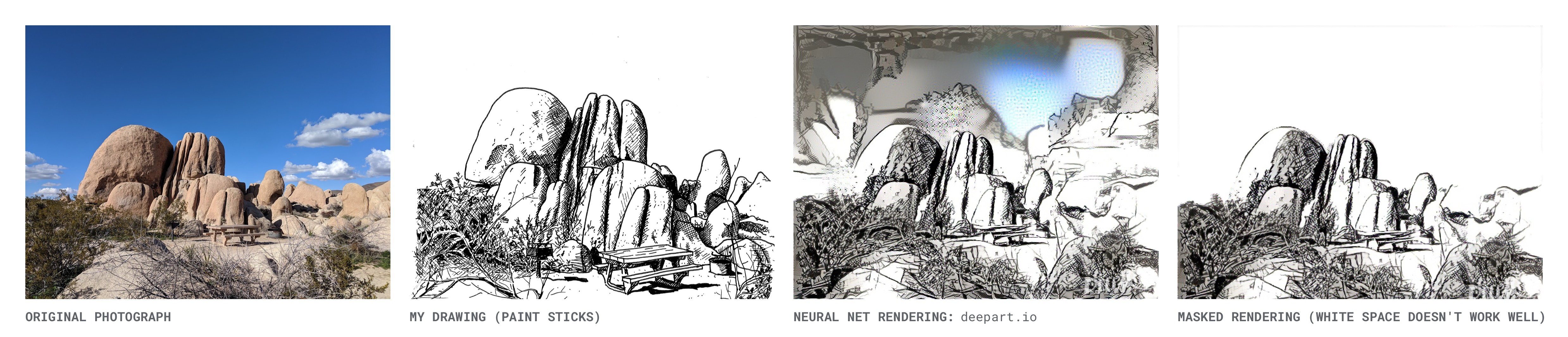

I had some fun this weekend exploring how I might leverage machine learning to ‘improve’ my paintings and drawings. Style transfer takes the content of one image and re-composes it in the style of another. It exploded onto the internet a few years ago, following a paper by Gatys, Ecker, and Bethge. Justin Johnson has a pretty popular implementation on GitHub, which is what I started with.
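To make the idea a bit more concrete: in Gatys et al.'s formulation, "content" is compared directly on a convolutional network's feature maps, while "style" is compared through Gram matrices, i.e. the correlations between feature channels. The sketch below writes those losses out in NumPy. It assumes the feature maps have already been extracted from a pretrained CNN (the paper uses VGG-19) and uses random arrays as stand-ins; the function names and the `alpha`/`beta` weights are illustrative, not taken from Johnson's code.

```python
import numpy as np

# Sketch of the content/style losses from Gatys, Ecker & Bethge.
# ASSUMPTION: feature maps were already extracted from a pretrained CNN;
# here they are plain (channels, height, width) arrays.

def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Channel-by-channel correlations of a (C, H, W) feature map: the 'style'."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # each row holds one channel's responses
    return flat @ flat.T               # (C, C) matrix

def content_loss(generated: np.ndarray, content: np.ndarray) -> float:
    """Half the squared error between feature maps at a single layer."""
    return 0.5 * float(np.sum((generated - content) ** 2))

def style_layer_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Squared Gram-matrix difference, normalized by the layer's size."""
    c, h, w = generated.shape
    n, m = c, h * w
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.sum(diff ** 2)) / (4.0 * n ** 2 * m ** 2)

def total_loss(gen_content, content, gen_styles, styles, alpha=1.0, beta=1e3):
    """alpha * content loss + beta * style loss, style layers weighted equally.

    alpha and beta are illustrative; their ratio trades off how much of the
    original composition survives versus how strongly the style is applied.
    """
    weight = 1.0 / len(styles)
    style = sum(weight * style_layer_loss(g, s) for g, s in zip(gen_styles, styles))
    return alpha * content_loss(gen_content, content) + beta * style

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Random stand-ins for CNN activations: one content layer, two style layers.
    content = rng.standard_normal((64, 32, 32))
    styles = [rng.standard_normal((64, 32, 32)), rng.standard_normal((128, 16, 16))]
    gen_content = rng.standard_normal((64, 32, 32))
    gen_styles = [rng.standard_normal(s.shape) for s in styles]
    print("total loss:", total_loss(gen_content, content, gen_styles, styles))
```

In the full method, this combined loss is minimized with respect to the pixels of the generated image by gradient-based optimization, which needs an automatic-differentiation framework such as TensorFlow or PyTorch; that step is left out of this sketch.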

Justin Johnson Implementation

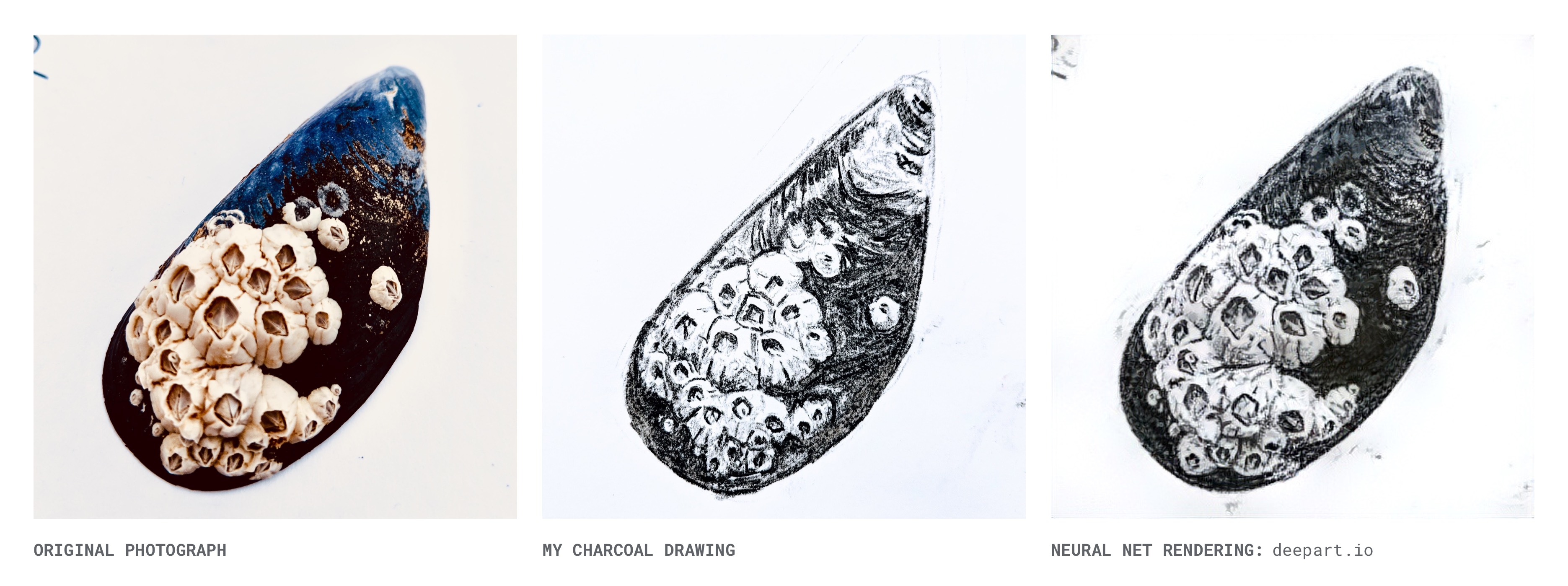

I wasn’t getting great results running things locally. (The shell image above, though, was a later attempt that turned out reasonably well.)

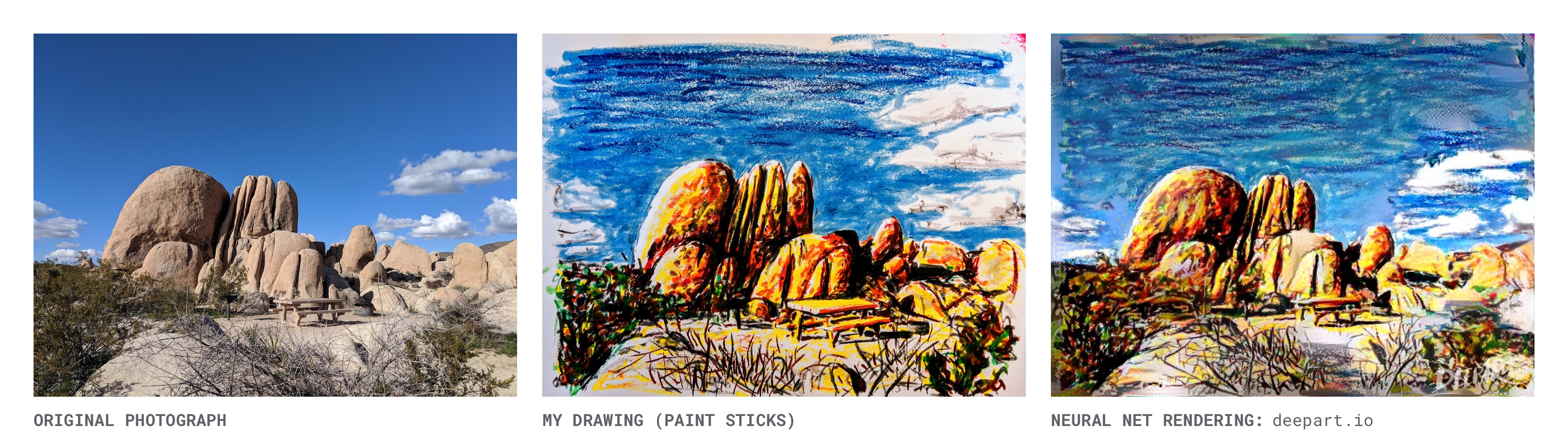

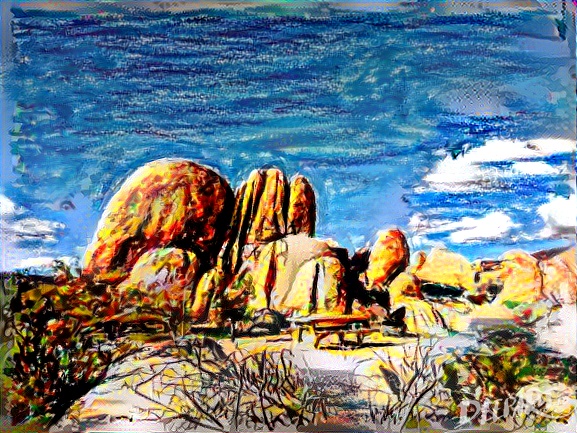

I was running the code on an old laptop, so each image took at least an hour to generate. This made iteration and experimentation pretty challenging. I played with a few online implementations of style transfer, and got some pretty great results from deepart.io.

Deepart.io

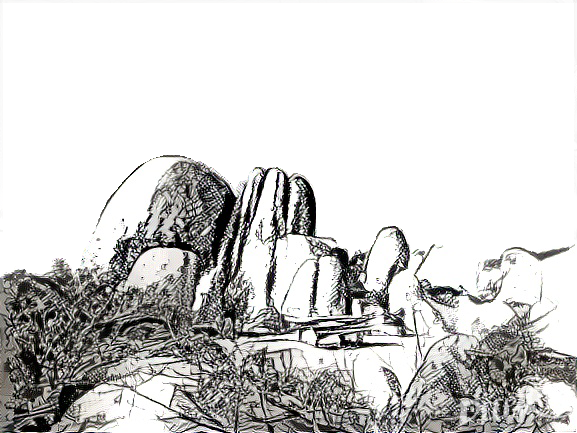

The following pen version isn’t great, but I masked the image afterwards, so building the masking into the process itself might help.
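The post doesn't say exactly how the masking was done. One plausible approach, sketched here purely as an assumption, is to composite the stylized output back over the original with a per-pixel mask so that only the inked strokes take on the new style. `apply_mask` and `ink_mask` are hypothetical helpers, and the 0.8 brightness threshold is arbitrary.

```python
import numpy as np

def apply_mask(stylized: np.ndarray, original: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Blend a stylized image over the original using a soft mask (hypothetical helper).

    stylized, original: float arrays of shape (H, W, 3) with values in [0, 1].
    mask: array of shape (H, W) in [0, 1]; 1 keeps the stylized pixel,
          0 keeps the original (e.g. the blank paper around a pen drawing).
    """
    if stylized.shape != original.shape:
        raise ValueError("stylized and original images must have the same shape")
    m = np.clip(mask, 0.0, 1.0)[..., np.newaxis]  # broadcast over color channels
    return m * stylized + (1.0 - m) * original

def ink_mask(original: np.ndarray, threshold: float = 0.8) -> np.ndarray:
    """Hypothetical helper: mark dark (inked) pixels as 1.0 and paper as 0.0.

    The threshold is an arbitrary assumption; a pale drawing would need a higher one.
    """
    luminance = original.mean(axis=-1)  # crude brightness: average of R, G, B
    return (luminance < threshold).astype(float)
```

This only covers masking after the fact. Building it into the process would instead mean restricting the style transfer itself to the masked region, which this sketch does not attempt.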

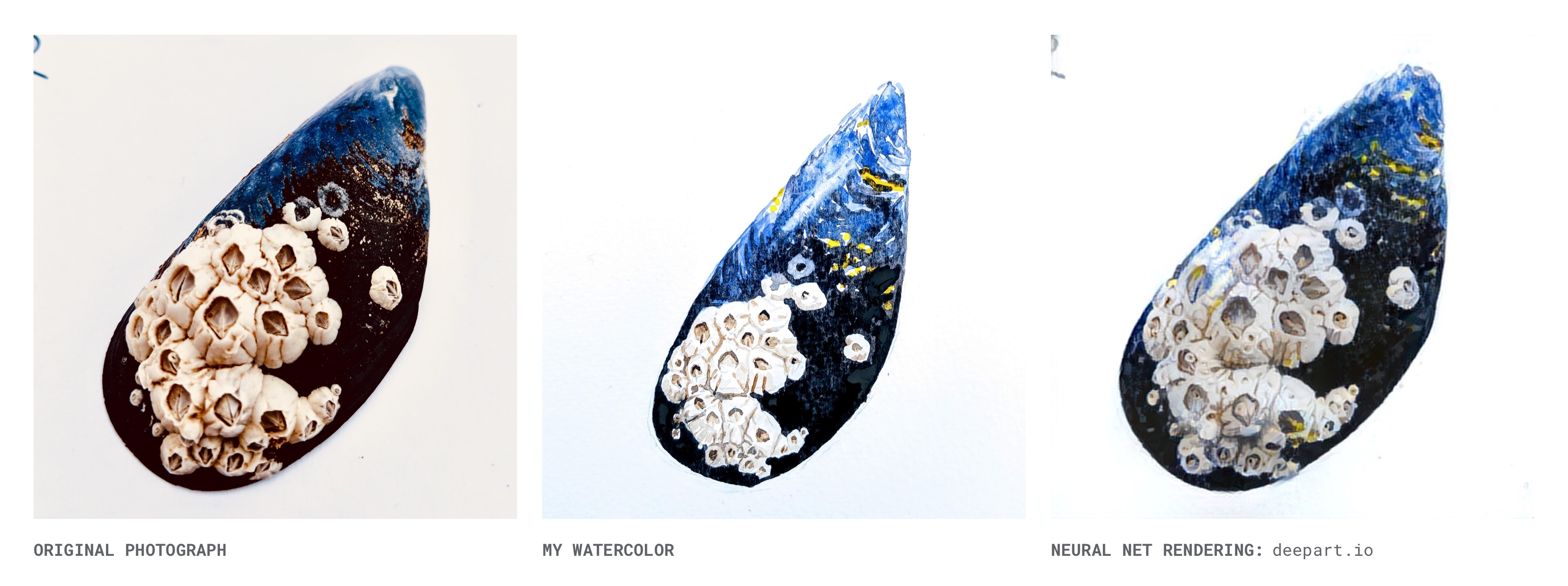

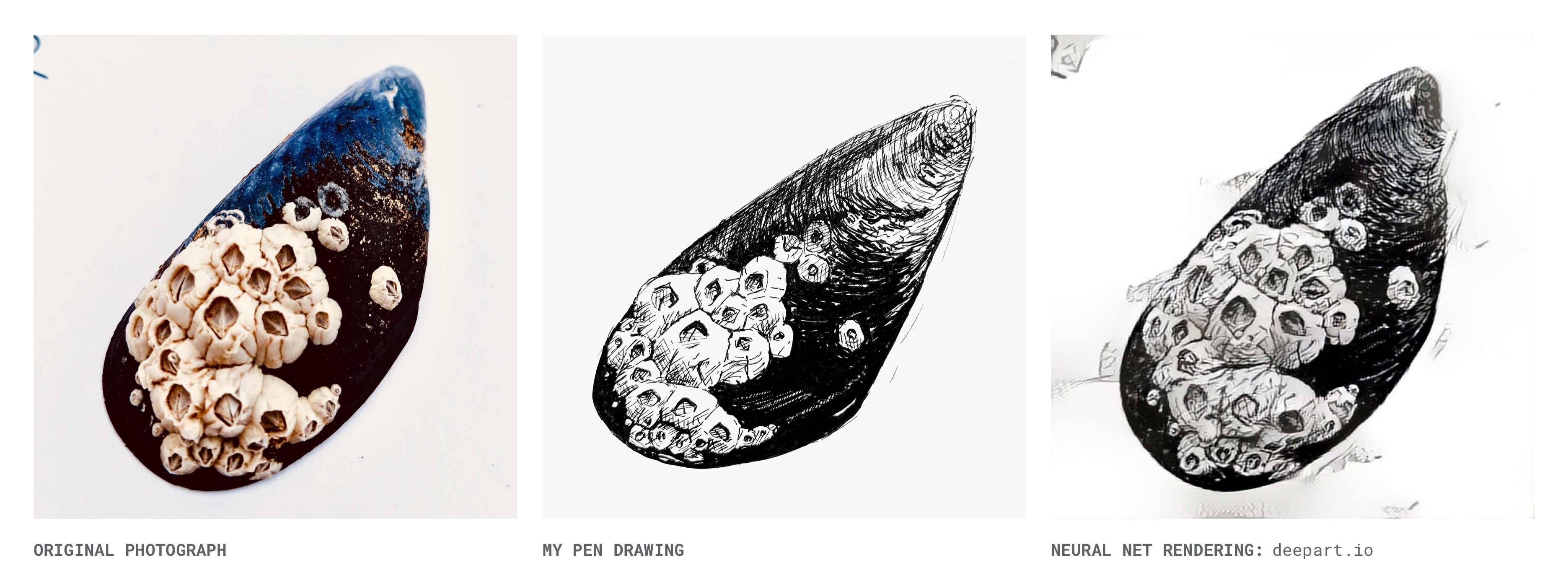

I explored further with a photo of a seashell that I had previously redrawn in several different mediums. Deepart does a great job of reproducing each of them:

My favorite

My favorite of these came about when I decided to upload a photo of one of my 3-year-old’s paintings to see what the result would look like:

Next steps

I’d love to roll my own implementation, so that I can really tweak and play with all the parameters. There are a few TensorFlow implementations that might run a little better on my machine, which I want to try. But what I really need to do is explore a cloud-based solution. Here are a couple that I’m planning to check out:

This reminds me that I’ve been meaning to dig into Gene Kogan’s ML for Artists resources, so that will definitely be on the to-do list for the next few weeks. I hope to post more here soon.